| database: | GenBank | EMBL | DDBJ |

|---|---|---|---|

| maintained by: | NCBI (National Institute of Biotechnology Information, USA) | EBI (European Bioinformatics Institute, GB) | NIG (National Institute of Genetics, Japan) |

| access, search: | Entrez | SRS | getentry |

| URL: | ncbi.nlm.nih.gov | ebi.ac.uk | ddbj.nig.ac.jp |

Bioinformatics

A text developed for an introductory bioinformatics course at the University of Potsdam

version: 2022-09-08

Stefanie Hartmann

1 Bioinformatics?

You are studying to (possibly) be a scientist! You’re studying computer science or a natural science such as biology – but what is science? Science on the one hand is the body of knowledge that represents our current understanding of natural systems. So far in your studies, you probably have mostly had to learn and memorize parts of this body of knowledge. You had to remember facts and definitions and discover connections between them.

Science is also the process that continually extends, refines, and revises this body of knowledge. This is a more active aspect of science: you need to do something, apply knowledge or methods, interpret results, think about connections and hypotheses and possible causes, and come up up with strategies to test hypotheses. This aspect of science most likely hasn’t been too prominent in your studies so far, but it’s what this course is all about.

When you have worked through this chapter, you should understand why every biologist today is also a bioinformaticist. You should be able to give an example, in very general terms, of how computational analysis of biological data can be used to answer questions in biology.

1.1 So you’ll want to be a biologist?

You might want to be a biologist because you are interested in studying the natural world, and because you want to do basic or applied science. For this, you’ll need of course the current content knowledge of your field of study, and of related fields. This is what you have learned in your courses so far: biochemistry, molecular biology, physiology, genetics, ecology, evolution, and more. But as just mentioned, as a scientist you’ll also need skills! You need to be able to think critically, solve problems, collect data, analyze data, interpret data / results, collaborate and work independently, communicate results, read & analyze primary literature, and much more. Over the last several decades, our biological content knowledge has constantly increased – both in breadth and in depth. Accordingly, the tools that scientists commonly use have also changed.

If you had studied biology 100 years ago or so, maybe you would have studied organisms and their properties. By observing and describing them, you might have been interested in their taxonomy and classification, or in their evolution. Regardless, your tools most likely would have been paper and pen, and maybe a microscope, depending on the organism under investigation.

Around 70 years ago or so, the first ‘revolution’ in biology took place: with the development of molecular biology techniques, for the first time it was possible to study details about underlying mechanisms of life. The availability of means to study DNA and heredity, ATP and energy, or conserved protein and DNA sequences, for example, lead to a reductionist approach to study biology. The focus shifted, and biologists studied phenomena at the level of molecules and genes, of individual metabolic processes. The relevant tools were, for example, pipettors, agarose and polyacrylamide gels, PCR machines, and also increasingly computers.

The next revolution took place rather recently, maybe 15 years ago or so. It was the development of two fields in parallel that fed off each other: The technologies for high-throughput data acquisition (such as next generation sequencing), and also the development and improvement of information technology and the internet. The modern biologist, regardless of his chosen field of study, uses computers to manage and analyze data. If bioinformatics is the collection, storage, and analysis of biological data, then in 2020 every biologist is a bioinformaticist. Which is why you’re required to take this course if you’re a BSc biology student.

1.2 Biology today

Today, biological data is generally generated in an electronic format. Even if data is collected in the field, it is usually converted into an electronic format afterwards. And apart from the data you collect or generate yourself, there is an enormous amount of public data available online, and all of that is in electronic format. Many different data types and formats exist, and in this course you’ll work with the most commonly used ones.

Here are some examples of biological data that is commonly used in today’s research:

sequences: strings of nucleotides or amino acids

graphs (networks): metabolic pathways, regulatory networks, trees

small molecules: gene expression data, metabolites

geometric information: molecular structure data

images: microscopy, scanned images

text: literature, gene annotations

You will learn how to analyze some of these data types computationally. This includes learning about which data analysis tools exist and how they work, so that you can decide which tools are appropriate for a given data set or biological question. You will have to learn how these tools work in practice, i.e., what kind of data and which data formats they accept as input, and what the output looks like and means. Both of these aspects will be covered in this course.

To get started, I’ll focus on sequence data, which is currently the most abundant data generated by any of the high-throughput technologies. I’ll use sequence data as an example for learning computational data management and analysis skills, but later in the semester I’ll also cover protein structure and networks.

Sequence data is not just abundant, it is also relevant for just about any taxonomic lineage and biological discipline: Botany, zoology, microbiology, virology, evolution, ecology, genetics, cell & developmental biology, physiology, biochemistry, clinical diagnostics, pharmacology, agriculture, and forensics – they all use sequence data to answer questions in their respective fields.

Take a look at the research and publication sites of some of the biology groups at the University of Potsdam (https://www.uni-potsdam.de/de/ibb/arbeitsgruppen/professuren-ags): Regardless of whether they do field work or lab work, regardless of whether they do research on animals or plants, basic or applied science, they do computational data analysis to explore large and complex data sets, and to extract meaning from these data. Chances are, you’ll have to do computational data analysis for your bachelor thesis work.

Here are five (more or less) randomly selected articles that were recently published by groups working at the biology department here in Potsdam and their collaborators:

plant ecology, Prof. Lenhard, 2020: Supergene evolution via stepwise duplications and neofunctionalization of a floral-organ identity gene (https://doi.org/10.1073/pnas.2006296117)

marine biology, Prof. Wagner, 2021: The Microbiome Associated with the Reef Builder Neogoniolithon sp. in the Eastern Mediterranean (https://doi.org/10.3390/microorganisms9071374)

animal physiology, Prof. Seyfried, 2020: Antagonistic Activities of Vegfr3/Flt4 and Notch1b Fine-tune Mechanosensitive Signaling during Zebrafish Cardiac Valvulogenesis (https://doi.org/10.1016/j.celrep.2020.107883)

animal evolution, Prof. Hofreiter, 2020: Early pleistocene origin and extensive intra‑species diversity of the extinct cave lion (https://doi.org/10.1038/s41598-020-69474-1)

animal biology, Prof. Fickel, 2021: Genome assembly of the tayra (Eira barbara, Mustelidae) and comparative genomic analysis reveal adaptive genetic variation in the subfamily Guloninae (https://doi.org/10.1101/2021.09.27.461651)

You don’t need to read these articles in detail, but if you do skim them, you’ll find that they all use large amounts of sequence data to answer a biological question. Some of the data was generated in the context of the study: selected gene sequences, for example, or even entire organellar or nuclear genomes. Some data required for these analyses was already publicly available and had to be downloaded from online databases.

If you take a look at the methods sections of these articles, you’ll see that an important and large part of these studies was the computational analysis of data, and with a great number of different programs. For many of these analyses, sequence data was compared against itself or against public data for the purpose of assembly, identification of homologs, variant discovery, alignments and phylogenies, to assign taxonomic lineage, or to predict functions and effects on phenotype, for example.

These articles describe studies that were designed by biologists who were motivated by biological questions. They were interested in animal or marine biology, in plant or animal ecology, or in animal physiology of medical relevance. Using computer programs for data analysis wasn’t their goal, but it just happened to be what was required to get the job done. For these biologists, bioinformatics wasn’t a biological discipline itself, it was simply a toolbox. And that’s exactly how I’ll use bioinformatics in this course.

1.3 Bioinformatics as a toolbox

In this course, data and questions and motivation come from biology, and bioinformatics is only the toolbox for data analysis. This includes

algorithms (methods) for data analysis

databases and data management tools that make data accessible

presentation and visualization tools to help comprehend large data sets and analysis results

I will introduce and cover many different tools and show their relevance and application. In some weeks, the focus will be on analysis methods, and in other weeks the focus will be on their application. My goal is to provide an overview of the concepts, applications, opportunities, and problems in bioinformatics research today. You will gain practical experience with commonly used tools for biological research; some of them will be used only once, but a number of them will be used in multiple weeks, with different data and different objectives. If all goes as planned, in February of next year, you will be able to:

understand the principles and applications of important tools for biological data analysis

search and retrieve genes and associated information (sequences, structures, domains, annotations, ...) from science online databases

perform small-scale analyses of sequences and structures using online analysis tools

statistically describe and explore biological data, using the software package R

The specific schedule of topics will be given in the lecture.

These topics covered in this class are, however, only the tip of the bioinformatics iceberg! In one short semester, I can only cover the basics and selected topics. You might learn about additional bioinformatics tools and applications in other BSc or later in MSc courses, during your BSc thesis work, or when you’ll need them for a job or project. And some of the tools you will never learn about or use, and that’s ok, too.

2 Background: molecular genetics, sequence data

What is a gene? What is an intron? Which are the four bases found in DNA? What is meant by “complementarity of DNA” or “degenerative code”? What is an “open reading frame”? How are genomes and genes sequenced? If you don’t have problems answering these questions, you can skip this chapter. Otherwise: read on! This chapter presents a very condensed introduction to molecular genetics, although I start out with an overview of what early scientists knew about genes and inheritance even before it was clear that the molecule behind it all is actually DNA, deoxyribonuleic acid. I skip a lot of details that can be looked up in other textbooks. At the end of the chapter I provide a very brief overview of DNA sequencing. However, this is a rapidly developing field, and online information probably is a good supplement to this section. My main aim is to introduce the terms and concepts that will be important for later chapters.

When you have worked through this

chapter, you should be able to define the following terms and explain

the following concepts.

Terms: genotype, phenotype, DNA, allele, chromosome,

diploid, haploid, genome, exon, intron, transcription, translation,

(open) reading frame sequencing, sequence assembly, genome,

transcriptome

Concepts: gene vs. protein, nucleotides vs. amino

acids, complementarity of DNA strands, nuclear vs. organellar genomes,

eukaryotes vs. prokaryotes, first vs. next generation sequencing,

principles of sequencing by synthesis

2.1 Before the double-helix

2.1.1 It started with peas ...

It started with an Austrian monk, the famous Gregor Mendel (1822-1884), who crossed peas (Pisum sativum) and traced the inheritance of their phenotypes. A phenotype, or trait, is some characteristic that can be observed. The phenotypes of pea plants that Mendel investigated were for example, the color, size, or shape of peas, or the color of flowers or pea pods.

Mendel started with true breeding plants, these are called the parental (or P) generation. When he crossed parental plants that produced only green peas with those that produced only yellow peas, he noticed that the next generation (the F1, or filial 1 generation) produced all yellow peas and no green peas at all. However, in the following generation (the F2, or filial 2 generation), the green peas reappeared – but only at a ratio of 1:3 (Figure 2.2). This phenomenon of a trait not showing up in one generation but still being passed on to the next generation was observed for other pea phenotypes as well: crossing true breeding wrinkled peas and true breeding round parental peas produced only round F1 offspring. Again, in the F2 generation, Mendel observed for every three round peas also one wrinkled pea.

This is only a very small part of Mendel’s research results, and more on his experiments can be found in any introductory genetics textbook. For our purpose of defining a gene, I summarize the following:

the inheritance of a phenotype, or trait, is determined by “hereditary units” that are passed on from parents to offspring. These hereditary units are what are called ‘genes’ today.

alternative versions (green or yellow peas) of these traits exist. Today these alternative versions are called alleles. For each trait, an individual inherits two alleles: one from each parent.

other terms that are generally introduced in this context are the following, although they are not discussed further in this chapter or elsewhere in this book

dominant: a dominant allele is the one that is active (expressed) when two different alleles for a given trait are in a cell

recessive: an allele that is only active (expressed) when two such alleles for a given trait are present in a cell; when one dominant and one recessive are present, the dominant allele is expressed

homozygous: refers to having two identical alleles for a given trait, two dominant or two recessive alleles

heterozygous: refers to having two different alleles for a given trait, one dominant and one recessive allele

2.1.2 ... and continued with flies

Thomas Hunt Morgan (1866-1945) made the next important contribution by tracing Mendel’s hereditary units to chromosomes. Morgan and his students worked with fruit flies and not with peas, but their premise was the same: they crossed fruit flies Drosophila melanogaster with different phenotypes and traced their inheritance through the generations.

Among the traits that Morgan studied were the color of the flies’ eyes: red or white. He kept track of the flies’ gender and noticed that Mendels’ principles held up for his fly crosses as well – but that it was only the males (symbol: ) that ever had white eyes (Figure 2.3). The females (symbol: ) never ended up with white eyes.

Microscopic analyses had already revealed chromosomes, structures that occurred in pairs, but whose function was yet unknown. Thomas Hunt Morgan deduced that one member of each such homologous pair came from each of the parents of an organism. He also noticed that females always have identical pairs of chromosomes, but that males have one pair of unmatched (homologous) chromosomes: Fruit fly males have identical pairs of autosomal chromosomes (autosomes) but their sex chromosomes consist of one X chromosome that is inherited from their mothers and one Y chromosome that is inherited from their fathers. Females, in contrast, have the same set of autosomal chromosomes and a set of identical X chromosomes. X and Y chromosomes are the sex chromosomes, the ones that determine the gender of fruit flies and many other organisms. Morgan correlated the inheritance of eye color with the sex chromosomes and deduced that the hereditary unit responsible for the white and red eye colors may be located on these chromosomes.

Morgan and his colleagues studied many other traits in the fruit fly, and from their careful work they concluded that

genes are arranged linearly on chromosomes

genes that are near each other on the same chromosome tend to be inherited together

with an increased size between genes, there is increased recombination (the exchange of genetic material between pairs of homologous chromosomes, see below.)

Their research on flies revealed another very important aspect of how genes influence phenotypes. The traits studied by Mendel were determined by single genes, and today these kinds of traits are called monogenic. Although early geneticists believed that pretty much all traits were monogenic, Morgan found evidence to the contrary. Today we know that most traits are polygenic, also called complex traits: Multiple genes contribute to the phenotype. Moreover, environmental factors are generally also involved in the development of a complex trait.

2.1.2.1 A small digression: meiosis

Meiosis is the process by which gametes (i.e., sperm and egg cells in humans) are produced. Each of the two alleles for a given trait of Gregor Mendel’s peas or Thomas Hunt Morgan’s flies come from one of the parents. Before two parents can produce offspring, their gene set must be reduced in half – otherwise we’d have a doubling of genetic material after each new generation of offspring!

The process that reduces a diploid cell (i.e., a cell that contains two homologous copies of each chromosome) into four haploid gamete cells (i.e., four cells that each have only half the number of chromosomes) is called meiosis. It is is schematically shown in Figure 2.4. During meiosis, a crossing over between paired homologous chromosomes results in the exchange of genetic material, or recombination. This results in offspring having a genetic composition that is different from either parent.

2.1.3 One gene – one enzyme

The next important results for defining genes came not from genetics but from biochemistry. By 1910 or so it was clear that enzymes catalyze biochemical reactions: enzymes can, for example, convert one molecule into another by breaking down or synthesizing biomolecules.

George Beadle (1903-1989) and Edward Tatum (1909-1975) discovered in the 1940s that mutations in genes could cause defects in defined steps of metabolic pathways. The main conclusion from their work was that specific genes correspond to specific enzymes, and these enzymes are responsible for the reactions of specific steps in biochemical pathways. How did they come up with this result?

Beadle and Tatum worked with the bread mold Neurospora crassa that can easily be cultured in the laboratory. They mutated the bread mold by exposing it to radiation and then studied how the mutant Neurospora could not survive on a medium that wasn’t supplemented with specific essential nutrients such as vitamins.

Further biochemical and genetic analysis by Beadle and Tatum showed that they had mutated (“knocked out”) specific genes. These mutated genes could not carry out their normal functions in metabolic pathways, for example the synthesis of essential nutritional components. In order to survive, the bread mold therefore had to be given the specific compound that the functional protein would normally have synthesized from precursor molecules. This is schematically shown in Figure 2.5.

The result of Beadle and Tatum’s work was the famous “one gene, one enzyme” hypothesis, suggesting that one gene is coding for the information behind one enzyme.

2.1.4 It’s the DNA!

By the 1950s it had been established that chromosomes carry the hereditary information. It was also known that chromosomes contain two types of substances: proteins and nucleic acids. Alfred Hershey (1908-1997) and Martha Chase (1927-2003) performed a clever experiment to demonstrate that the genetic material was the nucleic acid (deoxyribonucleic acid, DNA), and not the protein.

Their study system was bacteriophages, which are viruses that infect bacteria: they attach to the bacterial membrane and inject their genetic material into bacteria, which results in the production of more viruses within the bacteria.

Hershey and Chase did two experiments in which they radioactively labeled either the protein of bacteriophages (Figure 2.6 A) or their DNA (Figure 2.6 B). They then showed that the radioactive phage protein remained outside, and the radioactive DNA was able to enter the bacteria. This finally resolved that the DNA on chromosomes was the genetic material responsible for Mendel’s “hereditary units”.

2.2 The famous double helix

After it was clear that DNA was the genetic material, it didn’t take long before the structure of this molecule was solved. Based on an X-ray photo of DNA by Rosalind Franklin (1920–1958), Francis Crick (1916-2004) and James Watson (1928) built a model of its 3-dimensional structure: DNA consists of two strands, the backbone of each is made up of pentose sugars (desoxyribose) and phosphates, in an alternating sequence (Figure 2.7). Covalently bound to the phosphates are the four different nucleotides: adenine, guanine, thymine, and cytosine. Biochemically, adenine and guanine are pyrimidines, and thymine and cytosine are purines.

Nucleotides of the two opposite DNA strands are non-covalently bound by hydrogen bonds: adenine always pairs with thymine, and there are two hydrogen bonds between them (dashed lines in Figure 2.7). Cytosine and guanine always pair, and between them there are three hydrogen bonds. This base pairing is also referred to as the ‘complementarity’ of DNA: Adenine and guanine complement each other, as do thymine and adenine. As a result, it is possible to deduce the nucleotides in one DNA strand if the sequence of the complementary strand is given:

ACGTATACGACTATCG

||||||||||||||||

TGCATATGCTGATAGCThe two strands of DNA have an antiparallel orientation, which means that their sugar-phosphate backbones are in opposite directions, as can be seen in Figure 2.7. The resulting asymmetric ends of the two DNA strands are generally referred to as 5’ (five prime) and 3’ (three prime). These numbers are derived from the carbons of the sugar rings in the sugar-phosphate backbone. The 5’ end of a DNA strand terminates in a phosphate group, and the 3’ end terminates in a sugar molecule. DNA sequence is generally written in 5’ to 3’ direction.

The four nucleotides are commonly represented by their first letters: A, G, T, and C. However, sometimes it is useful or necessary to be ambiguous about the nucleotide at a given position. For this purpose, one-letter codes for representing such ambiguity can be used. These are shown in Table 3.2.

| Symbol | Meaning | Origin of designation |

|---|---|---|

| G | G | Guanine |

| A | A | Adenine |

| T | T | Thymine |

| C | C | Cytosine |

| R | G or A | puRine |

| Y | T or C | pYrimidine |

| M | A or C | aMino |

| K | G or T | Keto |

| S | G or C | Strong interaction (3 H bonds) |

| W | A or T | Weak interaction (2 H bonds) |

| H | A or C or T | not-G, H follows G in the alphabet |

| B | G or T or C | not-A, B follows A |

| V | G or C or A | not-T (not-U), V follows U |

| D | G or A or T | not-C, D follows C |

| N | G or A or T or C | aNy |

Base pairing of complementary nucleotides in the DNA molecule was able to explain how genetic material can be copied: one strand serves as the template for the synthesis of the other. Furthermore, different phenotypes could be explained as occasional errors during the process of DNA replication that introduce mutations in one of the daughter copies of a newly synthesized DNA molecule. The newly discovered information lead to a refinement of the gene concept: Genes are the physical and functional units of heredity, and nucleotides on chromosomes are the physical location of genes.

2.2.1 The flow of information

DNA is the molecule for the storage of information, but enzymes (proteins) are the functional and structural workhorses of the cell. An intermediary molecule, ribonucleic acid, or RNA, plays an important role in the process of making a functional protein from the information stored in DNA. RNA is very similar to DNA, except that it is a single-stranded molecule, its sugar isn’t desoxyribose but a ribose, and instead of thymine it contains uracil (U).

The central dogma of molecular biology describes the flow of information in cells: DNA is copied (transcribed) into RNA. RNA is then translated into protein. However, DNA and RNA have four bases, whereas there are 20 amino acids that are the building blocks of proteins. How is the information in DNA encoded to make corresponding proteins?

The genetic code was solved in 1961 and explains how sets of three nucleotides (the codons) each specify translation into one amino acid (Figure 2.9 and Table 2.2). There are \(4^3 = 64\) possibilities of arranging four nucleotides into sets of three, but there are only 20 different amino acids. This means that some amino acids are encoded by more than one codon, and the genetic code is therefore degenerate: The amino acid tryptophan, for example, is only translated from the codon TGG, whereas alanine can be produced from either of four nucleotide triplets: GCA, GCC, GCG, or GCT.

To specify where the protein-coding region of a gene begins, and therefore where translation should begin, a designated start codon is used: ATG. Because this codon also codes for an amino acid, methionine, proteins usually begin with the amino acid methionine. In addition, there are three stop codons (TAA,TAG,TGA). These do not code for any amino acid and only indicate the position of translation termination.

| Amino Acid | 3-letter code | 1-letter code | Codons |

|---|---|---|---|

| Alanine | Ala | A | GCA,GCC,GCG,GCT |

| Cysteine | Cys | C | TGC,TGT |

| Aspartic Acid | Asp | D | GAC,GAT |

| Glutamic Acid | Glu | E | GAA,GAG |

| Phenylalanine | Phe | F | TTC,TTT |

| Glycine | Gly | G | GGA,GGC,GGG,GGT |

| Histidine | His | H | CAC,CAT |

| Isoleucine | Ile | I | ATA,ATC,ATT |

| Lysine | Lys | K | AAA,AAG |

| Leucine | Leu | L | TTA,TTG,CTA,CTC,CTG,CTT |

| Methionine | Met | M | ATG |

| Asparagine | Asn | N | AAC,AAT |

| Proline | Pro | P | CCA,CCC,CCG,CCT |

| Glutamine | Gln | Q | CAA,CAG |

| Arginine | Arg | R | CGA,CGC,CGG,CGT |

| Serine | Ser | S | TCA,TCC,TCG,TCT,AGC,AGT |

| Threonine | Thr | T | ACA,ACC,ACG,ACT |

| Valine | Val | V | GTA,GTC,GTG,GTT |

| Tryptophan | Trp | W | TGG |

| Tyrosine | Tyr | Y | TAC,TAT |

| Stop Codons | Ocr, Amb, Opl | Z | TAA,TAG,TGA |

Note: in some texts, the ‘first half of the genetic code’ refers to how a gene sequence is translated into a peptide sequence based on the nucleotide triplet code I just described. The term ‘the second half of the genetic code’ then refers to the rules that determine how a chain of amino acids folds into a functional protein.

2.2.1.1 Us and Them

Eukaryotes are organisms that contain the majority of their DNA in a special compartment within a cell, the nucleus. Eukaryotes can be unicellular or multicellular, and examples of eukaryotic organisms are animals, plants, or fungi. Their DNA in the nucleus is often called the “nuclear genome”. Eubacteria and archaebacteria (also referred to as Bacteria and Archaea), in contrast, are both prokaryotes: they do not contain their DNA in a special compartment within a cell.

In addition to their nuclear genome, Eukaryotes also contain one or two additional genomes: the organellar genomes. The mitochondria of all Eukaryotes contain their own DNA, as do the chloroplasts of photosynthetic Eukaryotes.

Of course, both eukaryotes and prokaryotes use DNA for storage of information, both use proteins for carrying out biochemical reactions, and transcription of DNA into RNA and translation of RNA into protein is carried out in eukaryotes as in prokaryotes. In eukaryotes, however, not all the transcribed information in an RNA molecule is actually used to produce a functional protein, and this extra material needs to be excised before the step of translation.

The regions in a DNA or RNA that actually make it into a protein are called exons. The regions that are spliced out are called spliceosomal introns. The RNA molecule that still contains both exons and introns is called pre-RNA or precursor RNA, and the reduced molecule is the mRNA or messenger RNA (see Figure 2.11 A).

Why are introns present in the DNA and the transcribed RNA if they don’t make it into the protein? Good question! For a while it was thought that introns are simply junk DNA that serve no purpose. It is now known that many introns contain information, for example, about the regulation of genes. The evolutionary origin of introns is an ongoing debate, and it has not been entirely settled whether prokaryotes once had introns and lost them, or whether introns and the machinery to splice them out was acquired only in the eukaryotic lineage.

A surprising discovery was the fact that a given DNA or pre-RNA sequence can give rise to more than one protein sequence – depending on which of the exons are used. The term alternative splicing refers to this fact, and an example is shown in Figure 2.11 B. Here, a pre-RNA sequence contains three exons. The final protein product may contain either all three exons, or the transcript may be alternatively spliced to give rise to a protein that only contains exons 1 and 3.

2.3 Hypothetical proteins

The availability of the genetic code, i.e., the ability to map amino acids of a translated protein to a sequence of nucleotides, lead to prediction of where genes could be. Based on where in a DNA sequence there are start codons, stop codons, and regions not interrupted by stop codons, the location of genes can be predicted. Of course, not every ATG in the genome codes for a start codon, and a lot of additional information is actually used to predict the location of genes in the genome!

Each DNA sequence has six so-called reading frames, or six possible ways to potentially make (read) a protein sequence: in the forward direction of a DNA strand, the first position of a codon could begin at the first position, at the second, or at the third, as shown below. A codon that starts at the fourth position is in the same reading frame as one that starts at the first position – it just has one less amino acid.

A protein could also be encoded on the complementary DNA strand, the one generally not shown but implicitly assumed. In order to get to the other strand and in order to write it into the same 5’ to 3’ direction, we just have to reverse and complement the original strand. Now there are again three possible reading frames for this strand, and that makes a total of six possible reading frames.

Although stretches of DNA that (potentially) code for proteins have six reading frames, they generally have only one open reading frame: one that is not interrupted by stop codons (indicated by asterisks) and could therefore potentially encode a protein sequence. Assuming that the DNA sequence below is taken from a stretch of protein-coding DNA, its open reading frame is most likely the second one, because it is the longest one and the one that doesn’t have one or more stop codons. As you can imagine, the presence of introns further complicates the matter of finding open reading frames and predicting gene locations.

forward

AGAATGGCTTAGCATCTCTGCAAAACCAACACAAAGCTATATTCTCTTAAAAATCAGTTCAACAAAAGAAGAACCTGAACACAG

1 R M A * H L C K T N T K L Y S L K N Q F N K R R T * T

2 E W L S I S A K P T Q S Y I L L K I S S T K E E P E H

3 N G L A S L Q N Q H K A I F S * K S V Q Q K K N L N T

reverse

CTGTGTTCAGGTTCTTCTTTTGTTGAACTGATTTTTAAGAGAATATAGCTTTGTGTTGGTTTTGCAGAGATGCTAAGCCATTCT

4 T V F R F F F C * T D F * E N I A L C W F C R D A K P F

5 L C S G S S F V E L I F K R I * L C V G F A E M L S H

6 C V Q V L L L L N * F L R E Y S F V L V L Q R C * A I Of course, just because a DNA sequence could potentially encode a protein doesn’t necessarily mean that it does. There is a lot more non-coding than coding DNA in eukaryotic genomes: only about 1% of our genomes, for example, codes for proteins. The other 99%, the “non-coding” rest, is made up of intergenic regions, introns. Here, “coding” refers to protein-coding, and RNA genes, which function without being translated into protein, are often called “non-coding (RNA) genes”. Examples include tRNAs, rRNAs, microRNAs, or snoRNAs.

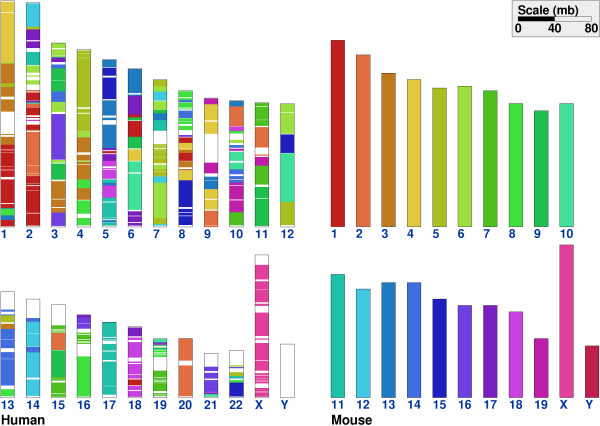

There are both computational and experimental approaches for verifying whether a predicted open reading frame corresponds to an actually expressed (functional) gene. However, even though the best evidence comes from wet-lab experiments, there is currently too much sequence data to allow for experimental verification of all genes. Computational prediction of genes is therefore an important task in bioinformatics, but it is beyond the topics I set out to cover here. Suffice it to say, gene finding is both computationally and biologically very difficult, and even two decades after human genome has been sequenced, scientists still don’t completely agree on the exact number of protein-coding genes in our genomes. And international collaboration publishes reliable gene sets for human and mouse at https://www.ncbi.nlm.nih.gov/projects/CCDS; as of this writing it lists of 27,219 predicted transcripts for 20,486 genes. These numbers are roughly comparable to those found in other eukaryotic genomes.

2.4 Sequencing genes and genomes

2.4.1 The first generation

Not long ago, researchers mostly sequenced and then analyzed individual genes: starting in the lab from genomic DNA or mRNA, they applied various molecular biology and biochemistry methods to identify the exact nucleotide sequence of genomic regions, genes, or gene fragments. The technology they used was developed in the 1970s by Frederick Sanger (1918-2013), and it is called “Sanger sequencing” after him, or “dideoxy” or “chain termination” sequencing. For obvious reasons, it is also referred to as the first generation of sequencing technology. It is based on single reactions of irreversible termination with fluorescence-labeled nucleotides. The resulting fragments are separated by size, and laser-induced fluorescence is then optically detected as it passes by the laser and is translated into bases. When converting the color and intensity of the fluorescence into a nucleotide, the analysis software also computes a quality score for each base that indicates how likely a base was called incorrectly.

Using Sanger sequencing, thousands of genes and genomic regions from a variety of species were sequenced and subsequently analyzed. Soon, also entire genomes were sequenced, although initially only rather small genomes, like that of a bacteriophage in 1977 or of the bacterium Haemophilus influenzae in 1995. The first eukaryotic genomes to be sequenced were that of two model organisms, the nematode Caenorhabditis elegans and the plant Arabidopsis thaliana. Both have relatively small genomes of about 100-135 million base pairs (Mbp). In 2001, an international and highly collaborative effort to sequence the approximately three billion base pairs (Gbp) of the human genome was completed, and a first version of our genome was published. This endeavor took about 13 years and cost about 2.7 billion US Dollars at the time.

2.4.2 The next generation

Sequencing using Sanger technology had been steadily improved and increasingly automated when, in the early 2000s, it reached a near-optimum with respect to sequencing cost and quality. Progress in technology as well as the increased potential for use of short-read sequences resulted in the development of “second” or “next” generation sequencing technologies. The first of these was pyrosequencing in 2005, followed quickly by Solexa/Illumina and SOLiD sequencing. These approaches differ with respect to template preparation, the sequencing reaction, and the image/data capture, but they all have in common that throughput is considerably increased and costs are dramatically reduced, compared to sequencing by irreversible chain termination. At least in theory and in highest-throughput settings, the $1000-Genome is possible with some of these technologies, although this price does not include the cost for data storage and analysis.

Compared to Sanger sequencing, important improvements of the Illumina technology, for example, include reversible termination of the synthesis reaction as well as the sequencing of millions of reactions in parallel. This is accomplished by clonally amplifying DNA templates that are immobilized on a a surface. As in Sanger sequencing, the signal detection is based on fluorescence-labeled nucleotides. One labeled nucleotide after the other is added to the reactions, and if it is complementary to the next base in a template DNA, it is incorporated. The intensity and location of the incorporated nucleotides is detected optically with high-resolution cameras. Software then processes the image files (that can easily be 100 Gb in size for a single run), resulting in the conversion of nucleotides and a quality score for each called nucleotide. A clear disadvantage of Illumina sequencing, in contrast to Sanger sequencing, is that the sequence reads are considerably shorter, between 75 and 150 bp, instead of around 800 bp.

The development of next-generation sequencing technologies is still ongoing, and some of the recent approaches are promising much longer reads. These include SMRT Pacific Biosciences (PacBio) or Oxford Nanopore Technologies (ONT) sequencing platforms, which can achieve a median sequence length of 8-10 kbp, with some sequences reportedly as long as 100 kbp. Look up some of these methods online: Review articles and results of internet searches will give you current and detailed information, and for all of the methods described, informative online videos are also available.

2.4.3 Sequencing principles

The basis of most sequencing technologies is DNA synthesis from a template, and the incorporation of a new nucleotide (dNTP) is a chemical signal that is captured and converted into a digital signal. For example, if a guanine is incorporated at a particular position during the reaction, we know that the corresponding base of the template is a cytosine. The detection of this signal is often done using fluorescence: each of the four nucleotides is labeled with a different fluorescent dye. Once the labeled nucleotides are incorporated into a new DNA strand, the color of the emitted light can then be directly translated into information about the corresponding base.

Exceptions to these aspects of sequencing are some of the aforementioned technologies for long-read sequencing: apart from being able to generate sequences that are several thousand nucleotides in length, they also employ a different biochemistry. Nanopore sequencing, for example, does not involve the synthesis of a complementary strand and instead achieves a direct readout of a DNA sequence.

Short-read technologies can only sequence a fragment of a gene or genomic region at a time, since the generated reads range in length from about 35 bp to 1,000 bp. Genes and genomes (chromosomes) therefore have to be assembled into longer contiguous regions (“contigs”) from multiple overlapping individual sequence fragments (Figure 12.6). Sequence assembly is very challenging, because of multiple biological and computational reasons, and the resulting genes and chromosomes are often fragmented and incomplete.

Figure 12.6 illustrates the principle of assembly using just a handful of sequence reads. Of course, for an entire genome sequence, this has to be done on a much larger scale! Every region on a genome, whether it contains genes or intergenic regions, is represented multiple times in the millions of sequence reads. This is shown schematically in Figure 2.13: the blue line corresponds only to a tiny fragment of a single chromosome. On the left side, an the approach of assembling overlapping reads into a contig that contains a protein-coding gene is shown. As mentioned above, gene finding methods are then applied to predict the location of exons and introns.

The right hand side of Figure 2.13 shows the approach of sequencing and assembling a so-called transcriptome, which only represents the part of a genome that contains protein-coding genes expressed (transcribed) during a particular time in a particular tissue. How is this accomplished? In the cell, mRNA is produced, which would then be translated into an amino acid sequence. In transcriptome sequencing, this mRNA is isolated and reverse-transcribed into c(omplementary)DNA. The DNA is subsequently prepared, sequenced, and assembled. One of the computational advantages is that much shorter regions (i.e., just genes, not long genomic regions) have to be assembled from this kind of sequencing data. However, this is also not trivial, and so both genome and transcriptome assemblies are often incomplete and highly fragmented.

Nevertheless, even imperfect genome data can be (and have been) used to answer a plethora of biological questions. The massive amounts of currently available sequence data have already transformed almost every biological discipline, and gene and genome data from both model organisms and non-model organisms are providing unprecedented insights into their biology and evolution. However, the handling and analysis of large amounts of high-throughput data requires bioinformatics expertise, and so almost every biologist today also has to be a bioinformaticist: welcome to the club.

2.5 References

Selected references for the material in this chapter:

Campbell NA (2016) Biology. Eleventh Edition. The Benjamin/Cummings Publishing Company Inc.

Gerstein MB, Bruce C, Rozowsky JS, Zheng D, Du J, et al. (2007) What is a gene, post-ENCODE? History and updated definition. Genome Res 17: 669-681.

Goodwin S, McPherson J, McCombie W. (2016) Coming of age: ten years of next-generation sequencing technologies. Nat Rev Genet 17, 333–351.

Zimmer C. (2018) She Has Her Mother’s Laugh: The Powers, Perversions, and Potential of Heredity. ISBN: 978-1509818532

3 Biological databases online

Online databases make different kinds of data available and easily accessible to scientists, educators, students, and the interested general public. Available data includes DNA and RNA sequences, protein structures, and scientific literature, for example. Online databases and analysis tools have become an important component of research activities in just about any biological discipline, and these resources have greatly changed the way research is done today.

An important purpose of databases is to make the data available in a format that a computer can read and further process. Keep this in mind as I offer in this chapter a broad overview of some commonly used biological databases. I will introduce what kinds of data online resources store, how they store it so that humans and computers can access and analyze it optimally, and how you can best search and use this flood of scientific information.

When you have worked through this

chapter, you should be able to define the following terms &

concepts. You should also be able to describe the type and content of

the listed online databases.

Terms & concepts: flat-file vs. relational

databases, primary/raw vs. annotated/curated data, genbank & fasta

format for sequence data, domain databases (organization, purpose)

Online databases: GenBank, UniProt (sprot, trembl),

PFAM, PDB, CATH

3.1 Biological databases: some technical information first

A search engine such as Google or DuckDuckGo returns any website it has indexed and that matches your search term(s). The results usually vary considerably in quality, format, language, and type, and they include professional and hobbyist web pages, news, images, video, and research articles, for example. When using a search engine, you search more or less the entire world wide web.

When you search an online database, in contrast, you search only through content that the administrators and curators of that database have included and prepared and formatted according to the database standards. There are online databases for literature, DNA and protein sequences, organismal relationships, metabolic pathways, and much more. Biology has become a data-intensive science for which large amounts of heterogeneous data needs to be stored, managed, made publicly available, and analyzed, and so online databases have become important tools for today’s biologists.

3.1.0.0.1 Early biological databases

As early as the 1980s, scientists saw the need to centralize biological sequence data, and to make this data available both for the research community and for computer analysis. The format for these early sequence databases was the “flat file”: separate plain-text files (i.e., without any formatting) for each entry. An example is shown in Figure 3.1. Here, the first two letters of each line indicate the type of information for that line, but these identifiers are not universal. You’ll see later that different databases use different of these identifiers, and/or different abbreviations for them.

Initially these types of databases worked well, but some problems soon become apparent. These included:

it was rather cumbersome to add new types of information: imagine that it becomes necessary to add the information “subcellular location”, for example, to each entry. Every single file would have to be altered to include the new line(s).

the manual entering and updating of information soon lagged behind the generation of new data

complex relationships between entries were very difficult to find and display

3.1.0.0.2 Newer biological databases

In order to address the problems just mentioned, current biological databases generally employ what is called a “relational database”. These have much the same purpose as the earlier implementations, but they easily allow searching for and retrieving complex information across multiple entries. These databases are computer readable but can be made human readable, and the updating and entering of data can be done very efficiently. On their websites, biological online databases display their entries in interactive HTML versions, and you’ll get to explore several of these later. For bulk download and large-scale analyses on local computers, most databases also offer this data in different formats, including text-based versions.

A relational database consists of multiple linked tables, and each table contains one type of information. Figure 3.2A shows a small example with just four tables: one for the sequence data, one for the organism from which the sequence was isolated, one for the journals in which the sequence or research results using this sequence were published, and one for its function and other description. Links (“relations”) between the two of these tables are shown in Figure 3.2B.

Each independent online database builds its own sets of tables (the relational database), plus an interface for searching and viewing the data. However, different data types in different databases are logically connected to each another. These connections between are established as (hyper)links: on the page for a given protein sequence, for example, you can find links to the relevant entries for DNA, literature, associated diseases, or conserved functional domains. Some of these links point to data on the same database, but several links usually point to an entry on a different database. We’ll come to some examples in a moment.

3.2 Online data

Online databases are important resources for any established or aspiring biologist. Maybe you have already done one or more of the following:

- looking up & obtaining

-

Online resources allow you to look up information: you can, for example, look up (and download!) the sequence or 3D structure for a given gene, find out if there are scientific publications associated with this gene sequence, to what class or family it belongs, or whether its function is known.

- comparing

-

Focusing again on sequences, online databases and web services allow you to compare a given sequence to other sequences and to find out whether similar sequences already exist in the database. If so, a more careful comparison might let you know if and where there are regions that are especially conserved, and thus possibly of functional importance.

- predicting

-

Furthermore, online resources allow you to make predictions: It may be possible to predict, for example, what a protein’s secondary or 3D structure is, or where a protein might be localized within a cell.

If you are interested in these or in any of the many other uses of online databases and web services, you need to know which database has the relevant data for a given question. You also need to know how the data is organized, and how different data types are logically connected to another. So read on!

3.2.0.1 Categories of databases: primary vs. secondary

How can we characterize online databases (and structure this chapter)? There are several options:

by the type of data they hold: DNA or protein sequences, protein structures, protein domains or motifs, human diseases, gene families, metabolic pathways, etc

by whether the data is ‘primary’ data that is submitted by scientists who sequence a gene or solve the 3D structure of a protein, or whether the data contains additional annotations, classifications, quality control, or is analyzed in any other way (‘secondary’ data)

by whether the database simply makes data available, or whether there are also online tools that facilitate the analysis of this data

by the organisms for which data the is available. Databases can be specific for data from plants or fungi, for example, or they may even contain data from a single organism only

by whether the database is publicly available without restrictions, or whether it is a commercial database

by the technical design of the database

The last two points are mentioned for completeness sake, but I’ll actually ignore them: all databases I cover here are publicly available, and I won’t talk about the technical designs of databases and just mention that differences exist. The first three ways of classifying databases are somewhat problematic: different resources may store both DNA and protein sequence, they may hold both primary and secondary data, and many offer access to both data archives and analysis tools. Despite the fact that these lines can be blurred, I describe databases based on the type of data they hold, and I’ll indicate whether this data is (mostly) primary or secondary data.

But what exactly is “primary” and “secondary data”? Primary data is pretty much the raw/original data that a researcher generates and then uploads to a database. DNA sequence data is an example of primary data, but I’ll also cover primary databases for 3D structures. Along with this data the researcher is required to provide what is called “metadata”: data about the data. This generally includes the organism from which the sequence was obtained, the name and affiliation of the researcher submitting the data, the technology with which the data was generated, etc. .

Secondary databases contain data obtained from primary databases, and these primary data are then organized, annotated, validated, integrated, consolidated, and checked for quality, completeness, or consistency. This is often done by human curators with a background in biology and in computer science, but in some cases it is done in an automated fashion by computers. Please note that data curation is distinct from data analysis:

I sequence gene X from organism Y, and I upload the sequence data as well as the required metadata to a primary database.

After some time has passed, a secondary database obtains this sequence for their next update. A curator checks whether someone else also has uploaded sequence X from organism Y before, whether the sequence has conserved functional domains, or whether someone else has solved the 3D structure of the corresponding protein, for example. They add this information as well as links to relevant entries in other databases and then publish the entry on the secondary database’s website.

You take data that you have generated yourself and/or that you take from primary and/or secondary databases. You analyze this data with appropriate methods, with the aim to test a hypothesis or to discover trends, patterns, or new information about a biological process or phenomenon. You publish the results and your interpretation in a journal or in your thesis.

3.3 Nucleotide sequences

DNA data stored in the databases we cover in this chapter has been processed from trace chromatograms (Sanger sequencing) or high-resolution image files (most next generation sequencing technologies). The processing of this raw data and the conversion into nucleotides data is called “base calling” and is done using computer software. Raw sequencing data takes up a lot of storage space and is available from designated databases such as the Trace Archive (Sanger sequencing) and the Short Read Archive (next generation sequencing technologies) at the National Institute of Biotechnology Information in the US. We will not cover these databases or aspects of processing the raw data.

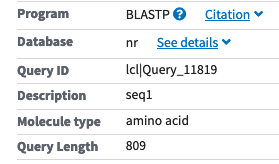

DNA sequence data in the most widely used primary databases contains a minimal amount of annotation and generally has not yet been curated. The three databases for nucleotide sequences are GenBank, the EMBL (European Molecular Biology Laboratory) database, and DDBJ (DNA database of Japan), summarized in Table 3.1.

Entries in these three databases are synchronized on a daily basis, so that within 24 hours any data submitted to NCBI, for example, can also be found in the EMBL database and DDBJ. However, although the three primary DNA sequence databases contain the same data, their interfaces and their software systems for searching and retrieving information are different (see Table 3.1). NCBI’s resources are probably more frequently used, so I’ll focus on these.

The volume of sequencing data has increased exponentially during the last 40 years, and managing this flood of data is not trivial. As of April 2020, there are 216,531,829 sequences with a total of 415,770,027,949 bases in GenBank. And that’s not all! The Whole Genome Shotgun (WGS) database contains incomplete genomes and chromosomes from eukaryotic and prokaryotic genome projects, and it currently holds 1,729,460,841 sequences with 8,162,441,243,179 nucleotides. This is publicly available information, and you can download it all. However, you’ll need about 1,142 GB of storage space on your computer just for the GenBank data...

Can you guess for which species the most sequence data is available in GenBank?

| entries | nucleotides | species |

|---|---|---|

| 1,942,470 | 172,374,368,927 | Triticum aestivum |

| 1,347,302 | 97,059,151,871 | Hordeum vulgare subsp. vulgare |

| 1,125,453 | 33,516,999,792 | Severe acute respiratory syndrome coronavirus 2 |

| 27,425,356 | 27,448,554,395 | Homo sapiens |

| 146,031 | 11,867,552,123 | Escherichia coli |

| 1,730,220 | 10,890,041,043 | Danio rerio |

| 10,028,841 | 10,457,481,544 | Mus musculus |

| 23,082 | 9,981,493,052 | Triticum turgidum subsp. durum |

| 4,219,148 | 7,410,260,496 | Zea mays |

| 21,522 | 6,749,235,260 | Secale cereale |

Focusing on our own species in this list: Do you know how many basepairs are in the human genome? – There are about 3.3 Gbp in a haploid human genome. And how many protein-coding genes do we have? – About 20,000. So regardless of whether the human data in GenBank predominantly consists of genomic fragments or of gene sequences: there is redundancy in this database. A case in point is the human tumor suppressor gene BRCA1, for which there are currently about 3,000 separate entries in GenBank. And that’s not even counting the other primary data repositories and all the still unpublished data of human genes and genomes. For a list of all DNA databases available just at NCBI, see https://www.ncbi.nlm.nih.gov/guide/dna-rna/.

For the purpose of efficient management, distribution, and analysis, the DNA data in GenBank, EMBL, and DDBJ is organized into different “divisions”. Current GenBank divisions group sequences based on taxonomy and on sequencing strategy: taxonomic divisions include BACT (bacterial sequences), PRI (primate sequences), ROD (rodent sequences), and PLN (plant sequences), for example. A large proportion of the data in GenBank is in the high-throughput divisions, these include sequence data from genomic data in the division HTG (high-throughput genome sequences) and from cDNA data in the division EST (expressed sequence tags). Once the low-quality sequences from high-throughput divisions have been ordered, quality-filtered, and/or re-sequenced, they are moved to the appropriate taxonomic divisions. See https://www.ncbi.nlm.nih.gov/genbank/htgs/divisions/ for a list of all GenBank divisions.

Here is where it might get confusing: GenBank is a primary database at NCBI; for management purposes, it is divided into divisions. Most GenBank divisions can be accessed through the “Nucleotide” database with the Entrez search system the ‘Nucleotide’’ database search function at NCBI searches GenBank. However, it also searches other databases, such as the RefSeq database that consists of curated GenBank entries (https://www.ncbi.nlm.nih.gov/refseq/). See Figure 3.3 for a visual of the “Nucleotide” searches.

3.3.0.1 A note on where the sequences come from

Sequences that are currently available in the online nucleotide databases are submitted directly by scientists or by genome sequencing groups. Sequences that are taken from the literature and patents are also stored here. The sequence data in GenBank varies greatly with respect to quality and annotation, and I briefly describe the main type of projects from which GenBank data is derived:

Individual research projects. Researchers that study (for example) specific metabolic pathways, signal transduction cascades, physiological responses, or developmental processes frequently study, isolate, and sequence the genes involved. They often characterize these genes and gene products very well on the biochemical, genetic, molecular, and/or structural level. Sequences submitted as a result of these studies are therefore usually annotated with an experimentally-derived function and other sequence features. They also are generally full-length sequences, i.e., they span the entire cDNA (or genomic) region of a protein-coding gene.

Large-scale projects. Genome projects aim to provide contiguous sequences of the chromosomes that make up a genome. Expression studies (transcriptome projects) aim to provide an inventory of genes that are expressed at a particular time or under a set of conditions. Both of these large-scale sequencing projects provide a lot of sequence data, for which experimental verification and functional annotation is generally not available. Often times, the only annotation associated with these sequences is the source organism. In addition, sequences generated in the context of a large-scale project are often unfinished when they are submitted to the database: they may be short, of low quality, and incomplete (ie., only gene fragments).

Environmental samples. Metagenomics is the genetic study of entire microbial communities: samples are taken from the environment, for example from ocean water, soil, or the human gut. DNA from the microorganisms in the samples is then extracted and directly sequenced, without first culturing the microbes in the laboratory. As a consequence, the source organisms of the sequences obtained might be unknown or even incorrectly assigned when they are submitted to the database.

3.3.0.2 Sequence entries at NCBI’s GenBank

Despite the problems of redundancy, incomplete gene sequences, and error, GenBank (as well as the other two primary databases mentioned) a tremendously useful resource. Let’s look at the structure and content of a typical GenBank entry.

A typical sequence entry at NCBI has three main sections: a header, features, and the DNA sequence (Figure 3.4). The header contains the unique ID of the entry, plus other brief descriptions of the sequence and from which organism it was isolated. We come back to the header in a moment. The next section describes the features of the particular sequence. A lot of different information can (but doesn’t have to) be listed here: if it is known where introns and exons are for genomic sequence, this is where you’ll find it. Other details, such as the presence and location of a mutation, of a binding site, or of a transit peptide can also be shown in this section. The third and final section gives the DNA sequence.

I mentioned at the beginning of this chapter that one of the purposes of databases is to make data available in a format that a computer can read. I’ll illustrate this using the header of a GenBank entry as an example.

Figure 3.5 shows the header of a sequence that was isolated from a plant, the lupine Lupinus luteus. The very first line starts with the word “LOCUS” in capitalized letters. You can read that the entry name for this sequence is X77043. The next item on the line lists the length of this particular sequence (836 base pairs), that it was isolated as mRNA and is a linear molecule (as opposed to, for example, a circular mitochondrial genome sequence). As mentioned above, sequences are labeled as belonging to one of several divisions, and the sequence shown here belongs to the plant division (PLN). Finally, you can read on the first line of this entry that it was entered or last modified on April 18 in 2005.

You can read and process this information, and you would have no problem if the order of the last two items (division and entry date) were switched, for example. Similarly, no human would have a problem finding out how long the sequence is if the information “836 bp” were shifted to the left by a few character positions – or even placed in a different relative position on this line.

For a computer, however, this makes much more of a difference. So that all entries can be automatically read and processed by a computer program, all information on this line is given in a precise order and at specific positions. The locus name, for example, always begins at the 13\(^\text{th}\) position of the first line. The number of base pairs in the sequence invariably ends in position 40, and the letters ‘bp’ are in positions 42 and 43.

The next line, the Definition, gives a brief description of the sequence, starting with the common name of the organism from which the sequence was isolated. If a function of the sequence or a gene name is available, this information is given here as well. The rules for this information, to make it computer-readable, is that it begins with the word ‘DEFINITION’ in capitalized letters, can span multiple lines, but invariably ends with a period.

Next comes the accession number, which is a stable identifier of the sequence in the entry. Even if the sequence changes, for example because an error was discovered and corrected, the accession number won’t change. However, you still can access the original sequence because the accession numbers are versioned: the line ‘VERSION’ gives the IDs of updated versions of an entry. These are called compound accession numbers.

If, for example, the lupine sequence with the accession X77043 changes for whatever reason, its compound accession number on the ‘VERSION’ line starts as X77043.1. If another change becomes necessary, the compound accession number would become X77043.2. This allows the individual user and other databases to access sequence data as it changes over time.

The final information in the header of a GenBank entry contains more detailed information about the source organism and, if available, a scientific publication associated with the sequence. One way to get to the corresponding protein sequence of a DNA entry (if it’s protein-coding), is the link after “protein_id” under “CDS”, listed under “FEATURES”.

The complete information of a sample GenBank entry can be found at the NCBI’s website (http://www.ncbi.nlm.nih.gov/Sitemap/samplerecord.html)

3.3.0.3 Other sequence formats

The GenBank format as described above provides a lot of information. But what if you don’t need all this information and just want the sequence? There is another very frequently used format for saving sequences, called ‘fasta’. It always starts with a ‘\(>\)’ sign, followed by a description of the sequence on a single line. On the next line, the actual sequence starts:

>gi|441458|emb|X77043.1| Lupinus luteus mRNA for leghemoglobin I (LlbI gene)

AGTGAAACGAATATGGGTGTTTTAACTGATGTGCAAGTGGCTTTGGTGAAGAGCTCATTTGAAGAATTTAATGCAAATATTCCTA

AAAACACCCATCGTTTCTTCACCTTGGTACTAGAGATTGCACCAGGAGCAAAGGATTTGTTCTCATTTTTGAAGGGATCTAGTGA

AGTACCCCAGAATAATCCTGATCTTCAGGCCCATGCTGGAAAGGTTTTTAAGTTGACTTACGAAGCAGCAATTCAACTTCAAGTG

AATGGAGCAGTGGCTTCAGATGCCACGTTGAAAAGTTTGGGTTCTGTCCATGTCTCAAAAGGAGTCGTTGATGCCCATTTTCCGG

TGGTGAAGGAAGCAATCCTGAAAACAATAAAGGAAGTGGTGGGAGACAAATGGAGCGAGGAACTGAACACTGCTTGGACCATAGC

CTATGACGAATTGGCAATTATAATTAAGAAGGAGATGAAGGATGCTGCTTAAATTAAAACGCATCACCTATTGCAATAAATAATG

AATTTTATTTTCAGTAACACTTGTTGAATAAGTTCTTATAAATGTTGTTCAAAATGTTAATGGGTTGGTTCACATGATCGACCTT

CCCTTAATGACAACATAATTCAGTTCGAAATTAAGGATATCTTAATATTATATGTACTTCCACTACAAATCCTTGCTGAGGTTGG

TGGTTTGTGTTAGCCTTTAAATTGGGAGAGTCTCCCTTAAGTTAAACTTTTCTTATAATAAATAAATATTATTTAAATAAGCTCA

TTGTTTGGAAGGTTTACACTATTTAATGATGGAATGCGATATATTATTATAAAAAAAAAAAAAAAAAAAAAThis format takes up a lot less space on a computer, but it also has less information about the sequence. Especially when working with large amounts of sequence data it is often worth considering whether the fasta format may be sufficient for your needs. For most of data analysis you’ll do in this course, a fasta file is needed.

3.4 Protein sequences

Proteins can be sequenced directly, using Edman degradation or mass spectrometry. Several public databases contain data from mass spectrometry experiments, for example the Peptide Atlas (http://www.peptideatlas.org/). Protein sequence data we cover in this chapter, however, has generally not been sequenced directly but instead has been inferred indirectly, namely by computationally translating protein-coding DNA sequences. These predicted protein sequences are stored in secondary databases, along with some additional functional annotation and links to other databases.

3.4.1 UniProt

A secondary database for protein sequence is UniProt, the Universal Protein Resource, at http://www.uniprot.org. It is a collaboration between European Bioinformatics Institute, the Swiss Institute of Bioinformatics, and Georgetown University. UniProt stores a wealth of information about protein sequence and function and makes this data available in subsets:

- UniProt Knowledgebase (UniProtKB)

-

contains extensive curated protein information, including function, classification, and cross-references to other databases. There are two UniProtKB divisions:

Swiss-Prot is a manually curated set of entries: These contain information extracted from the literature and curator-evaluated computational analyses. Annotations include a protein’s function, catalytic activity, subcellular location, structure, post-translational modification, or splice variants. The curator resolves discrepancies between sources and creates cross-references to primary and other secondary databases.

TrEMBL is a computer-annotated set of entries. These will eventually be manually curated and then moved to the Swiss-Prot division.

- UniProt Reference Clusters (UniRef)

-

combines closely related sequences into a single record. Sometimes it is important (and faster) to work with only a single sequence of rbcl, the ribulose bisphosphate carboxylase, and not with all \(>\)30,000 of them that are available in UniProt.

- UniProt Archive (UniParc)

-

this section contains the different versions of entries and thus reflects the history of all UniProt entries.

Similar to GenBank entries, there are HTML and text-only versions for each Uniprot entry. Examples are shown in Figure 3.6. The HTML version is easily read by humans and contains many cross-links to other online resources. The text-only version is suitable for automatic processing by computer programs, and the ID lines all start with two capital letters and fulfill the same purpose as the identifiers of the NCBI files described earlier.

3.5 Motifs, domains, families

Protein sequences contain different regions that are important for the function of the protein and thus evolutionarily conserved. These regions are often called motifs or domains. Proteins can be clustered (grouped) into related families based on the functional domains and motifs they contain, and this type of information is the basis for many online resources.

A given sequence is represented exactly once in a primary sequence database and the UniProt resource. Take, for example, the protein TPA, tissue plasminogen activator, which is a protein that converts plasminogen into its active form plasmin, which then can dissolve fibrin, a protein in blood clots. To carry out this function, the TPA protein requires the presence of four different functional domains, one of which is present in two copies (Figure [tpa]).

GenBank has (at least) one entry for the TPA sequence from humans, one entry from mouse, plus entries from other organisms for which this gene has been sequenced. Each of these entries contains the information discussed above.

Similarly, for each TPA sequence from a different organism there is a single UniProt entry that describes in detail the function of this protein. Since the TPA sequences from human and other primates are highly similar in sequence, they are found in a common UniRef cluster of sequences with 90% or more sequence identity.

In a database of functional domains, in contrast, the TPA is listed in multiple entries: A protein sequence can be found in as many different domain family entries as it has recognizable functional domains. This also means that the information on a given entry of a domain database may not pertain to the entire protein, but only to the particular domain.

3.5.1 Prosite

The Prosite database (https://prosite.expasy.org/) of the Swiss Institute of Bioinformatics uses both profiles and patterns (regular expressions) to describe and inventory sequence families. Prosite’s goal is to group sequences that contain regions of similar sequence, and thus similar function, into families. In addition, it allows the user to quickly scan a protein sequence for conserved motifs. Here is an example to illustrate how Prosite works:

Zinc fingers are structural and functional motifs that can bind DNA and are therefore often found in transcription factors, which, by binding to DNA, regulate the transcription of genes. Zinc fingers assume a certain 3D structure, are relatively short, and some of the amino acid positions in the domain are always occupied by the same amino acids. Multiple such motifs are often present in a single protein. A canonical zinc finger motif is shown in Figure 3.7, in which each filled circle represents an amino acid.

If you were to describe this particular sequence in words, you might come up with something like: a C followed by any 2 to 4 letters followed by C followed by any 3 letters followed by one of L, I, V, M, F, Y, W, or C followed by any 8 letters followed by H followed by any 3 to 5 letters followed by H.

This description does the job of defining a the zinc finger motif, but it certainly is awkward. Prosite does better: it uses regular expressions to specify motifs.

In the regular expression syntax of the Prosite database, one-letter

codes for amino acids are used, and the lower-case letter ‘x’,

represents any amino acid. Parentheses indicate the range or

exact number of amino acids at a given position. Amino acids are

separated by the ‘-’ sign. The description “a C followed by any

2 to 4 letters” would therefore be translated into the Prosite regular

expression “C-x(2,4)”

Sometimes one of several amino acids are allowed at a single position

within the motif. In this case, all accepted amino acids are listed

within brackets. Thus, “one of L, I, V, M, F, Y, W, or C” would be

translated into the Prosite regular expression

“[LIVMFYWC]”.

Putting all this together, you can describe the zinc finger motif

with the following Prosite regular expression:

C-x(2,4)-C-x(3)-[LIVMFYWC]-x(8)-H-x(3,5)-H

If you had a sequence of unknown function, you could submit it to the Prosite website to scan it for a match to the zinc finger motif: the sequence shown below would return a positive result; the motif is underlined.

ITLLEQGKEPCVAARDVTGRQYPGLLSRHKTKKLSSEKDIHDISLSKGSKIEKSKTLHLKGSN

EGQQGLKERSISQKIIFKKMSTDRKHPSFTLNQRIHNSEKSCDSNLVQHGKIDSDVKHDCKST

LHQKIPYECHCTGEKS CKCEKCGKVFSHSYQLTLHQRFHTGEKP YECTGEKPGKTFYPQL

KRIHTGKKSYECKECGKVFQLVYFKEHERIHTGKKPYECKECFGVKPYECKECGKTFRLSFYL

TEHRRTHAGKKPYECKECGKFNVRGQLNRHKAIHTGIKPF

How does Prosite actually scan a sequence for known motifs? The syntax I just introduced is only the human-readable form of a motif description. Internally, the syntax presented in the chapter on regular expressions is used by the software.

Regular expressions are a powerful and efficient approach for describing patterns, but they also have limitations. Take, for example, the alignment of seven sequences in Figure 3.8, in which shaded amino acids indicate conserved columns. Although all seven sequences match the zinc finger regular expression, the topmost sequence is very different from the other six sequences. This illustrates that regular expressions cannot incorporate information about the relative frequency of amino acids within a motif, for example.

3.5.2 Pfam

The most widely used domain database is probably Pfam. It is maintained by a number of international research groups and can be accessed at http://pfam.sanger.ac.uk. Its goals are to cluster (group) functionally and evolutionarily conserved protein domains into families, and to make these available to the scientific community.

Pfam describes domains and motifs in a way that captures the conservation of different alignment columns. The basis for these computations are Hidden Markov Models, the details of which are beyond the scope of this text. Essentially, a statistical model of sequence conservation from a representative set of sequences for a given domain is computed. Using this model as a basis, additional sequences that belong to this family can then be identified and added to the alignment.

Some domains occur in many proteins and/or in all domains of life, and the corresponding Pfam domain families are made up of hundreds or even thousands of sequences. Other domains have been identified in only a few sequences and/or species (so far). Regardless of the size of a given domain family, Pfam offers for each one of them a variety of information:

a summary description of the protein domain’s function

a list of organisms in which the protein domain has been identified

if available, a list of the solved 3D structures of proteins that contain the domain

a list of architectures in which the domain occurs, i.e., with which other domains together a given domain has been found in proteins

a multiple sequence alignment. The use and generation of multiple sequence alignments are covered in detail in Chapter 9.

a Hidden Markov Model (HMM), which is basically a statistical description or model of the multiple sequence alignment and captures the conservation of sequences that are members of a domain family. HMMs are computed using the software HMMER (http://hmmer.janelia.org) and also serve to assign new sequences to the existing domain families. The entire Pfam database is based on this software.

and much more

3.5.3 InterPro

Prosite and Pfam contain similar data, but the corresponding web sites have different interfaces, the domains and motifs are represented in very different ways, and both have their own strengths and weaknesses. Considering that these are just two of many available motif and domain databases, a confused user might ask which one of these to visit and use. Fortunately, there are some efforts to integrate databases with similar and overlapping information. InterPro is the result of such an effort.

InterPro combines the information of a number of different motif and domain databases in a single location, and Prosite and Pfam are among these member databases. InterPro can be accessed at http://www.ebi.ac.uk/interpro. Users can search InterPro entries for text (keywords, gene identifiers), and the InterProScan functionality allows to query a sequence against InterPro, basically searching all member databases simultaneously for matches to a sequences.

3.6 3D-Structures

3.6.1 PDB